NIST Gen AI Profile

It is 2025, and the landscape of generative artificial intelligence (GenAI) is both exhilarating and exhausting. Conversations around GenAI are fraught with complexities that stem from the many layers of AI solutions. One of the primary challenges is the widespread lack of understanding of the risks associated with AI. Many individuals and organizations struggle to grasp how AI systems are built and trained, often overlooking the nuances of probabilistic results and state space. This gap in knowledge can lead to unrealistic expectations and misinformed decisions.

The constant fear of missing out exacerbates the situation. Organizations face immense pressure from AI vendors' sales pitches, from board-level discussions (often misinformed or based on marketing) about the potential of GenAI, and from the pervasive narrative of "productivity gains" promised by AI.

Understanding the first-order effects of GenAI in human-driven workflows is challenging enough. These effects include immediate changes in productivity, decision-making processes, and task automation. However, the second-order effects, those indirect consequences that ripple through systems and society, are even more elusive. These can encompass shifts in job roles, alterations in organizational structures, and broader societal impacts. The complexity of these second-order effects makes it nearly impossible to predict all outcomes, underscoring the need for continuous monitoring and adaptive strategies in AI risk management.

The News

A recent study by Microsoft Research explores the impact of generative AI on critical thinking among knowledge workers. The survey of 319 participants revealed that higher confidence in GenAI correlates with reduced cognitive effort and critical thinking, while higher self-confidence in personal skills leads to more critical engagement with AI-generated outputs. The study highlights a shift in critical thinking towards tasks like information verification and response integration. These findings underscore the need to probe deeper into the second-order effects of GenAI on our workforce (and by extension, our society), as we are only beginning to understand how these technologies influence cognitive processes and decision-making in professional environments.

A Bloomberg analysis highlights the pervasive issue of bias in generative AI. The study found that AI-generated images often amplify existing stereotypes, particularly around race and gender. Despite efforts to mitigate these biases, there is no comprehensive testing framework that can identify all instances of bias in AI systems. As a result, many biases are discovered only through real-world use, underscoring the need for ongoing vigilance and adaptive strategies to address these challenges. This situation exemplifies the unpredictable second-order effects of GenAI on society and the workforce.

This 2024 article from The Verge highlights the pitfalls of Google's AI Overview feature, which has been known to generate misleading and sometimes dangerous advice. One notable example involved recommending non-toxic glue to keep cheese from sliding off pizza, advice traced to a decade-old joke on Reddit. This incident underscores the limitations of large language models (LLMs), which generate text through probabilistic next-token prediction. An LLM produces a response one token (a word or word fragment) at a time, choosing each token from a probability distribution conditioned on its input, without truly understanding the context or verifying the accuracy of the information. This probabilistic approach can lead to errors, especially when the model encounters uncommon queries or jokes presented as fact.
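To make "next-token prediction" concrete, here is a toy sketch in Python. The probability table is invented for illustration and does not come from any real model; a real LLM computes such a distribution over a vocabulary of tens of thousands of tokens or more using learned weights. The point it shows is narrow: both greedy selection and sampling pick continuations by likelihood alone, and neither step checks whether the continuation is true.

```python
import random

# Hypothetical next-token probabilities for a context such as
# "To keep cheese from sliding off pizza, add ..." (invented, NOT from a real model).
NEXT_TOKEN_PROBS = {
    "more": 0.40,
    "less": 0.25,
    "glue": 0.20,  # a joke that was repeated often enough in source text
    "sauce": 0.15,
}


def greedy(probs: dict[str, float]) -> str:
    """Greedy decoding: pick the single most likely next token."""
    return max(probs, key=probs.get)


def sample(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling: draw a next token in proportion to its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    print("greedy:", greedy(NEXT_TOKEN_PROBS))
    draws = [sample(NEXT_TOKEN_PROBS, rng) for _ in range(1000)]
    print("share of 'glue':", draws.count("glue") / len(draws))
```

With these made-up numbers, greedy decoding returns "more", but sampling still produces "glue" in roughly one draw in five, because nothing in the procedure distinguishes a joke from a fact.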

NIST AI 600-1

Enter NIST AI 600-1, Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile. This publication is effectively the first "profile" published to apply the NIST AI Risk Management Framework (RMF) to a particular use case.

From the paper:

A profile is an implementation of the AI RMF functions, categories, and subcategories for a specific setting, application, or technology – in this case, Generative AI (GAI) – based on the requirements, risk tolerance, and resources of the Framework user. AI RMF profiles assist organizations in deciding how to best manage AI risks in a manner that is well-aligned with their goals, considers legal/regulatory requirements and best practices, and reflects risk management priorities. Consistent with other AI RMF profiles, this profile offers insights into how risk can be managed across various stages of the AI lifecycle and for GAI as a technology.

Cool! The paper goes on to discuss different dimensions of AI risk. Because it aims to be broadly applicable, covering creators of GenAI as well as users of GenAI, the authors cast a wide net.

- Stage of AI Lifecycle

Risks can arise during design, development, deployment, operation, and/or decommissioning.

- Scope

Risks may exist at individual model or system levels, at the application or implementation levels (i.e., for a specific use case), or at the ecosystem level – that is, beyond a single system or organizational context. Examples of the latter include the expansion of “algorithmic monocultures” resulting from repeated use of the same model, or impacts on access to opportunity, labor markets, and the creative economies.

- Source of risk

Risks may emerge from factors related to the design, training, or operation of the GAI model itself, stemming in some cases from GAI model or system inputs, and in other cases, from GAI system outputs. Many GAI risks, however, originate from human behavior, including the abuse, misuse, and unsafe repurposing by humans (adversarial or not), and others result from interactions between a human and an AI system.

- Time scale

GAI risks may materialize abruptly or across extended periods. Examples include immediate (and/or prolonged) emotional harm and potential risks to physical safety due to the distribution of harmful deepfake images, or the long term effect of disinformation on societal trust in public institutions.

What is interesting is the specific call-out that effectively "blames the user" as a source of AI risk. The authors could likely have been cleaner with the language. In any system, users can pose risk; this is no more or less true of AI systems. What differs is that in AI systems it is not easy to build the same type (and quality) of guardrails to protect the system, which likely makes AI easier to abuse and misuse. That should be classified as a technology risk, not a "human" risk. Secondly, owners (who may be human) can place the AI in unsafe situations, for example, by authorizing a narrow AI system for general-purpose use. This risk should be called out separately, and accountability for placing a system in the wrong context needs to be understood. Grouping all of those risks as "human" is frankly lazy.

The paper then goes on to enumerate several AI risks.

To guide organizations in identifying and managing GAI risks, a set of risks unique to or exacerbated by the development and use of GAI are defined below. Each risk is labeled according to the outcome, object, or source of the risk (i.e., some are risks “to” a subject or domain and others are risks “of” or “from” an issue or theme). These risks provide a lens through which organizations can frame and execute risk management efforts. To help streamline risk management efforts, each risk is mapped in Section 3 (as well as in tables in Appendix B) to relevant Trustworthy AI Characteristics identified in the AI RMF.

Here is a summary:

| Name | Description |
|---|---|
| CBRN Information or Capabilities | Eased access to or synthesis of materially nefarious information or design capabilities related to chemical, biological, radiological, or nuclear (CBRN) weapons or other dangerous materials or agents. |
| Confabulation | The production of confidently stated but erroneous or false content (known colloquially as “hallucinations” or “fabrications”) by which users may be misled or deceived. |
| Dangerous, Violent, or Hateful Content | Eased production of and access to violent, inciting, radicalizing, or threatening content as well as recommendations to carry out self-harm or conduct illegal activities. Includes difficulty controlling public exposure to hateful and disparaging or stereotyping content. |
| Data Privacy | Impacts due to leakage and unauthorized use, disclosure, or de-anonymization of biometric, health, location, or other personally identifiable information or sensitive data. |
| Environmental Impacts | Impacts due to high compute resource utilization in training or operating GAI models, and related outcomes that may adversely impact ecosystems. |
| Harmful Bias or Homogenization | Amplification and exacerbation of historical, societal, and systemic biases; performance disparities between sub-groups or languages, possibly due to non-representative training data, that result in discrimination, amplification of biases, or incorrect presumptions about performance; undesired homogeneity that skews system or model outputs, which may be erroneous, lead to ill-founded decision-making, or amplify harmful biases. |
| Human-AI Configuration | Arrangements of or interactions between a human and an AI system which can result in the human inappropriately anthropomorphizing GAI systems or experiencing algorithmic aversion, automation bias, over-reliance, or emotional entanglement with GAI systems. |
| Information Integrity | Lowered barrier to entry to generate and support the exchange and consumption of content which may not distinguish fact from opinion or fiction or acknowledge uncertainties, or could be leveraged for large-scale dis- and mis-information campaigns. |
| Information Security | Lowered barriers for offensive cyber capabilities, including via automated discovery and exploitation of vulnerabilities to ease hacking, malware, phishing, offensive cyber operations, or other cyberattacks; increased attack surface for targeted cyberattacks, which may compromise a system’s availability or the confidentiality or integrity of training data, code, or model weights. |
| Intellectual Property | Eased production or replication of alleged copyrighted, trademarked, or licensed content without authorization (possibly in situations which do not fall under fair use); eased exposure of trade secrets; or plagiarism or illegal replication. |
| Obscene, Degrading, and/or Abusive Content | Eased production of and access to obscene, degrading, and/or abusive imagery which can cause harm, including synthetic child sexual abuse material (CSAM), and nonconsensual intimate images (NCII) of adults. |
| Value Chain and Component Integration | Non-transparent or untraceable integration of upstream third-party components, including data that has been improperly obtained or not processed and cleaned due to increased automation from GAI; improper supplier vetting across the AI lifecycle; or other issues that diminish transparency or accountability for downstream users. |

The Suggested Actions

Keeping in line with the NIST AI Risk Management Framework (RMF), the suggested actions are organized under its four functions: Govern, Map, Measure, and Manage.

The Govern function of the AI RMF focuses on the importance of establishing robust governance structures to manage AI risks effectively. From the doc:

After putting in place the structures, systems, processes, and teams described in the GOVERN function, organizations should benefit from a purpose-driven culture focused on risk understanding and management.

In this section, you can expect to find high-level statements about the organizational structures you should have, the expectation that you align to "regulations", and statements around culture.

The Map function focuses on building structures and processes to identify and understand AI risk. It has components that help organizations discuss the use of AI within the context of the organization. From the doc:

Implementation of this function is enhanced by incorporating perspectives from a diverse internal team and engagement with those external to the team that developed or deployed the AI system. Engagement with external collaborators, end users, potentially impacted communities, and others may vary based on the risk level of a particular AI system, the makeup of the internal team, and organizational policies

The Measure function focuses on creating appropriate measures to evaluate AI system performance and associated risks. It generally includes controls that employ a mix of quantitative, qualitative, or "mixed-method" tools, techniques, and methodologies to benchmark AI risk and monitor impacts. From the doc:

After completing the MEASURE function, objective, repeatable, or scalable test, evaluation, verification, and validation (TEVV) processes including metrics, methods, and methodologies are in place, followed, and documented.

The Manage function is the meat of the document, focusing on effectively implementing the other functions in practice. From the doc:

After completing the MANAGE function, plans for prioritizing risk and regular monitoring and improvement will be in place. Framework users will have enhanced capacity to manage the risks of deployed AI systems and to allocate risk management resources based on assessed and prioritized risks.

The rest of the document is effectively an attempt to apply the RMF described above along with the controls defined in the NIST AI RMF Playbook against the GenAI profile.

Let's take a look at an example to get a feel for the depth and considerations of the document. Many organizations, particularly when implementing GenAI, do not put adequate monitoring and response mechanisms in place, so let's see what guidance NIST provides. The control I've chosen to look at is Manage 4.1.

From the doc:

Manage 4.1: Post-deployment AI system monitoring plans are implemented, including mechanisms for capturing and evaluating input from users and other relevant AI Actors, appeal and override, decommissioning, incident response, recovery, and change management.

Under this section, the following suggested actions are presented.

MG-4.1-001: Collaborate with external researchers, industry experts, and community representatives to maintain awareness of emerging best practices and technologies in measuring and managing identified risks.

MG-4.1-002: Establish, maintain, and evaluate effectiveness of organizational processes and procedures for post-deployment monitoring of GAI systems, particularly for potential confabulation, CBRN, or cyber risks.

MG-4.1-003: Evaluate the use of sentiment analysis to gauge user sentiment regarding GAI content performance and impact, and work in collaboration with AI Actors experienced in user research and experience.

MG-4.1-004: Implement active learning techniques to identify instances where the model fails or produces unexpected outputs.

MG-4.1-005: Share transparency reports with internal and external stakeholders that detail steps taken to update the GAI system to enhance transparency and accountability.

MG-4.1-006: Track dataset modifications for provenance by monitoring data deletions, rectification requests, and other changes that may impact the verifiability of content origins.

MG-4.1-007: Verify that AI Actors responsible for monitoring reported issues can effectively evaluate GAI system performance including the application of content provenance data tracking techniques and promptly escalate issues for response.

Interesting. Hidden in a lot of these controls are the concepts of training/education/skills, sourcing "industry research", producing internal and external reporting, and a bit of AI red-teaming. If you stop to think about it, you will probably notice that the suggested actions are extremely high-level, that they might be difficult (if not impossible) to apply in many contexts, and that it is ambiguous what "success" would even look like. We will circle back to this point later.
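To be fair, a few of the actions do map onto familiar engineering patterns. MG-4.1-006 (tracking deletions, rectification requests, and other dataset changes for provenance) is one. The sketch below assumes a hash-chained, append-only change log is an acceptable mechanism; NIST does not prescribe one, and the `ChangeLog` class and its field names are hypothetical. Each entry commits to the hash of the previous entry, so silently editing or reordering history breaks verification.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry (keys sorted)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class ChangeLog:
    """Hypothetical append-only log of dataset changes; each entry chains to the last."""
    entries: list[dict] = field(default_factory=list)

    def append(self, record_id: str, action: str, reason: str) -> str:
        # action would be a change type MG-4.1-006 mentions, e.g. "delete" or "rectify"
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"record_id": record_id, "action": action,
                "reason": reason, "prev_hash": prev_hash}
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = ChangeLog()
    log.append("user-42", "delete", "erasure request")
    log.append("doc-7", "rectify", "corrected birth date")
    print(log.verify())                           # intact history
    log.entries[0]["reason"] = "routine cleanup"  # rewrite history
    print(log.verify())                           # tampering detected
```

A hash chain only detects tampering if the latest hash is also stored somewhere the log's owner cannot quietly rewrite (for example, a separate system or an external timestamping service). Deciding where that anchor lives, and who reviews it, is exactly the kind of detail the suggested action leaves open.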

The Issues

I think there are a couple of issues to explore in relation to using the NIST RMF as a tool for responsible AI. Firstly, I think that the risk treatment approach to responsible AI will not ultimately be effective, but it may be the best approach we have today. Secondly, I think that the NIST RMF doesn't consider first- and second-order effects. Lastly, the NIST RMF suggested actions aren't actually actionable enough. Let's examine each in turn.

Ugh, Risk Treatment

It is a bit of an unfortunate state of our industry that we still believe risk management and treatment methodologies are adequate as an approach to dealing with harms. Risk management methodologies generally make the following assumptions:

- Risks are known, understandable, and can be enumerated/categorized

- Risk impacts are quantifiable (at least to an order of magnitude)

- Risk and harms can be translated and described in financial terms

- Risk likelihood can be measured even if probabilistic in nature

- All risks can be measured/monitored in the same fashion

- Risk mitigations actually decrease the likelihood and impact of adverse outcomes (and do not introduce other risks)

- Cost of mitigations can be calculated financially and weighed against risk tolerance, improvement, etc.
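Several of these assumptions are easiest to see in the classic quantitative formula many risk registers still use: annualized loss expectancy (ALE) equals single loss expectancy (SLE, the dollar impact of one occurrence) times annualized rate of occurrence (ARO). The sketch below is a minimal illustration with hypothetical risk names and figures; every number it needs is one of the assumptions listed above.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    single_loss: float  # SLE: assumed dollar impact of one occurrence
    annual_rate: float  # ARO: assumed expected occurrences per year

    @property
    def ale(self) -> float:
        """Annualized loss expectancy: ALE = SLE * ARO."""
        return self.single_loss * self.annual_rate


def control_value(before: Risk, after: Risk, annual_cost: float) -> float:
    """Net yearly value of a mitigation: ALE before - ALE after - cost of the control.

    Assumes the control only lowers this risk and introduces no new ones.
    """
    return before.ale - after.ale - annual_cost


# Hypothetical register; names and figures are invented for illustration.
register = [
    Risk("Confabulated answer reaches a customer", single_loss=20_000, annual_rate=3.0),
    Risk("Prompt injection leaks internal data", single_loss=250_000, annual_rate=0.2),
]

if __name__ == "__main__":
    # Rank risks the way a register typically would: by expected yearly loss.
    for risk in sorted(register, key=lambda r: r.ale, reverse=True):
        print(f"{risk.name}: ALE = ${risk.ale:,.0f}")

    # Suppose human review cuts customer-facing confabulations to one per year.
    reviewed = Risk("Confabulated answer reaches a customer", 20_000, 1.0)
    print(f"Net value of review: ${control_value(register[0], reviewed, 15_000):,.0f}")
```

The code runs happily, which is the problem: it needs a finite list of risks, a dollar figure for each harm, and a rate that a mitigation simply lowers with no side effects. For harms like eroded critical thinking or entrenched bias, none of those inputs exist in a form you could defensibly type in.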

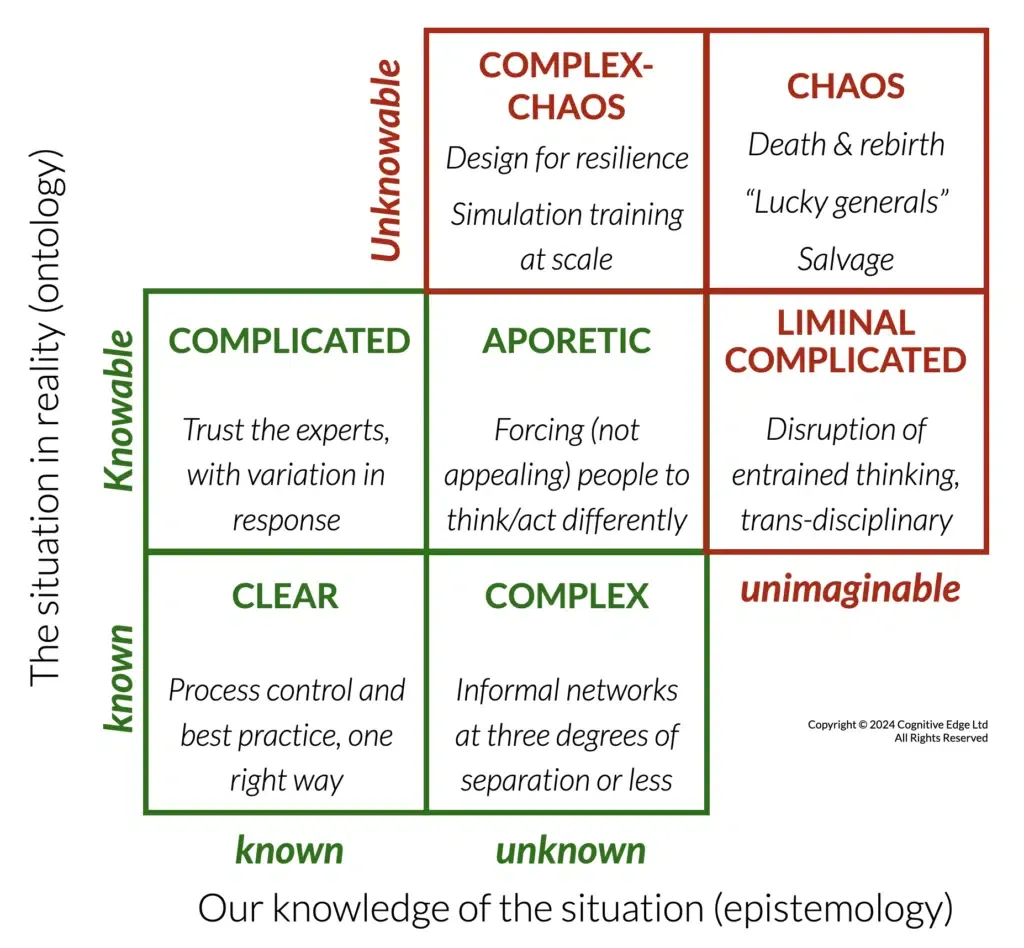

A good place to start in understanding why this approach isn't adequate is the uncertainty matrices.

I really like using this frame to discuss the range of harms and risks an organization faces. I would say traditional risk management really focuses only on the green boxes, and even then, the coverage isn't great. It is beyond the scope of this article to go into detail on what could be done better, but you can probably intuit that the "sum" of risks in the red boxes is likely greater (in count and severity) than the sum in the green ones. If you want, do a bit of a thought experiment here. How many different colours can you imagine? How many different colours can you name? How many different colours do you think exist in the spectrum our eyes can perceive?

I will have to give some credit where credit is due. This particular NIST document does try to call out this deficiency in the preamble (read: the part that everyone skips) to the profile controls. From the doc:

Importantly, some GAI risks are unknown and are therefore difficult to properly scope or evaluate given the uncertainty about potential GAI scale, complexity, and capabilities. Other risks may be known but difficult to estimate given the wide range of GAI stakeholders, uses, inputs, and outputs. Challenges with risk estimation are aggravated by a lack of visibility into GAI training data, and the generally immature state of the science of AI measurement and safety today. This document focuses on risks for which there is an existing empirical evidence base at the time this profile was written; for example, speculative risks that may potentially arise in more advanced, future GAI systems are not considered. Future updates may incorporate additional risks or provide further details on the risks identified below.

The best way to read the above statement is that the document is a good look back at the risks that were evident one to three years before the time of writing, and that it was likely already out of date by the time of publication.

First- and second-order effects

There have been a lot of advances in systems thinking since the stone age, when risk methodologies were invented. One of the main "upgrades" to our way of thinking is understanding the first- and second-order effects that our actions have on systems. First-order effects can be thought of as the immediate and direct consequences of the actions being taken. Second-order effects can be thought of as the often unforeseen consequences that emerge over time, changing the system dynamics themselves and/or the governing and enabling constraints.

Examples of first-order effects:

- Job displacement

- Bias and Discrimination

- Privacy and Security risks

Examples of second-order effects:

- Societal shifts

- Alterations in org structures, skills balance, etc.

- Long-term biases

- Impact on critical thinking

Here is one easy way to think about this. Introducing GenAI into a workplace or process may increase speed and efficiency in the short term. The longer-term effects could be that employees lose critical thinking skills, understand the process less and therefore cannot intervene when necessary, and shift from "problem solver" to "problem overseer", losing joy and intrinsic motivation in the job. Since AI is only good (if you can consider it good at anything) at looking at the past and abstracting linear patterns, your organization may actually lose its ability to be flexible and fluid in the future, leaving it open to adaptability risks. This is just one example.
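A toy model cannot predict these effects, but it can show their shape. The sketch below is a deliberately simple stock-and-flow model; the function name, parameters, and every rate in it are invented for illustration and are not calibrated to any study, including the Microsoft research mentioned earlier. The first-order effect is immediate (routine throughput rises as work is automated); the second-order effect accumulates slowly (the skill stock people need in order to intervene is fed by hands-on practice, which automation removes).

```python
def simulate(weeks: int, automation_share: float, ai_speedup: float = 1.5,
             learn: float = 0.05, decay: float = 0.03, start_skill: float = 0.8):
    """Toy model of one team; all parameters are hypothetical.

    automation_share: fraction of routine work handed to a GenAI assistant
    ai_speedup: throughput multiplier on the automated share
    learn, decay: weekly rates at which practice builds skill and disuse erodes it
    Returns (throughput per week, skill per week).
    """
    skill = start_skill
    throughput, skills = [], []
    for _ in range(weeks):
        # First-order effect: automated work gets done faster, starting immediately.
        throughput.append((1 - automation_share) + automation_share * ai_speedup)
        skills.append(skill)
        # Second-order effect: less hands-on practice changes the skill stock itself.
        practice = 1 - automation_share
        skill += learn * practice * (1 - skill) - decay * automation_share * skill
        skill = min(max(skill, 0.0), 1.0)  # keep skill in [0, 1]
    return throughput, skills


if __name__ == "__main__":
    for share in (0.0, 0.8):
        tp, sk = simulate(weeks=104, automation_share=share)
        print(f"automation {share:.0%}: throughput {tp[0]:.2f}, "
              f"skill week 1 {sk[0]:.2f} -> week 104 {sk[-1]:.2f}")
```

With these invented numbers, 80% automation lifts weekly throughput from 1.0 to 1.4 immediately, while the skill stock drifts from 0.8 toward an equilibrium of about 0.29 (where skill gained from practice equals skill lost to disuse) instead of climbing toward 1.0 as it does without automation. Nothing in week one hints at this; it only shows up if someone keeps measuring.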

There are no easy ways to quantify and predict these types of second-order effects, and not all of them are negative. Organizations like the Partnership on AI have tried to create risk and opportunity "signals" that capture some second-order effects.

No depth of recommendations

For any of the recommendations you read within this profile document, ask yourself the following questions:

- What people, process, technology would I need just "lying around" in order to properly execute on the recommendation?

- What measures of success would I need to be looking at in order to understand the effectiveness and impact of the control?

- What attributes of my current situation and context would change the need for the control and how it is implemented?

- To what level of quality do I need to implement a particular control?

For example, look at the following suggested action:

Devise a plan to halt development or deployment of a GAI system that poses unacceptable negative risk.

The action is written very much like a SOC control: the answer could literally be anything, and you would meet the control. In this case, there are no "audit standards" to use as a baseline to determine whether the quality of the control meets industry minimums, nor are there links to additional documentation that would help you even build the control.
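For contrast, here is a minimal sketch of what a halt criterion becomes once it answers the questions above: what is measured, what threshold triggers a halt, how much evidence is required before deciding, and who is accountable. The `HaltCriterion` structure, the metric name, the 2% threshold, the 500-sample minimum, and the owner role are all hypothetical choices made for illustration, not values from NIST or any audit standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HaltCriterion:
    """One measurable trigger for halting a GAI deployment (all values hypothetical)."""
    metric: str       # what is measured, e.g. share of reviewed outputs flagged as confabulated
    threshold: float  # halt when the observed rate exceeds this
    min_samples: int  # minimum reviewed outputs before a decision is made
    owner: str        # named role accountable for executing the halt


def should_halt(criterion: HaltCriterion, flagged: int, reviewed: int) -> bool:
    """Return True when enough outputs were reviewed and the flagged rate is too high."""
    if reviewed < criterion.min_samples:
        return False  # insufficient evidence; keep monitoring
    return flagged / reviewed > criterion.threshold


CONFABULATION = HaltCriterion(
    metric="confabulation_rate",
    threshold=0.02,
    min_samples=500,
    owner="Head of AI Product",
)

if __name__ == "__main__":
    print(should_halt(CONFABULATION, flagged=4, reviewed=300))   # below the evidence bar
    print(should_halt(CONFABULATION, flagged=14, reviewed=600))  # about 2.3%, over threshold
```

Returning False below the sample minimum is itself a design choice: a real plan might escalate early when even a small sample shows an extreme rate. The value of writing it down is that choices like this become visible and reviewable, which the suggested action, as worded, does not require.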

I'm not saying all the controls are this bad, but it is important to read this document and understand that there is a lot left to be desired when determining how to implement these controls properly.

Conclusion

Developing a risk management standard for generative AI is no small feat, especially when the technology itself is evolving faster than our collective understanding, and the standard is being built by committee. The NIST AI RMF Generative AI Profile represents a thoughtful, earnest attempt to establish structure in a chaotic and high-stakes landscape. It successfully begins to organize known risks, calls for governance, and encourages the kinds of conversations that every organization deploying GenAI should be having.

However, as with all early frameworks in an emergent field, this is far from the final word. The complexity of GenAI defies neat categorization. Its risks aren't just technical; they are systemic, psychological, organizational, and societal. These risks evolve in nonlinear ways, and their full impacts are often obscured until long after deployment. Traditional risk treatment methods can feel too rigid or simplistic for such a dynamic environment, and the framework’s high-level recommendations, while directionally useful, frequently lack the granularity needed for real-world application.

Moreover, voices are missing. The strength of a standard like this depends on inclusivity: diverse disciplines, global perspectives, small and large organizations, technologists and non-technologists alike. We need the input of those impacted, not just those building and selling the technology. More participation will not only improve the breadth and relevance of the framework, but it will also help surface the “unknown unknowns” that any committee, no matter how well-intentioned, is bound to overlook.

The NIST GenAI Profile is a promising foundation. But it is just that: a foundation. The true value of this work will come not from static adoption, but from its evolution. To build a trustworthy, responsible AI future, we must treat this framework as a living document, constantly updated through lived experience, rigorous scrutiny, and the humility to acknowledge what we don’t yet understand. The work is ongoing, and it needs all of us.
